Hive Smart VM - An explanation.

Hello Everyone,

Ever since writing the last Hive GPT report, I have been meaning to write a post that details the Hive Smart VM project... so here it is!

The Hive Smart VM project in its entirety can be found here.

As noted in the report, everything in this project is still in a testing phase and nothing is being written to the blockchain at this time. If you want a simplified explanation of what the Hive Smart VM project is about, read the previously mentioned Hive GPT report.

Since I have yet to decide where to store the file chunks, I have been sticking with a chunk size of 64,536 characters. This leaves a little wiggle room for adding a file hash, the next (and previous) block number, and any other metadata that I may want to include. The number is based on the idea that a Hive post can contain roughly 65,000 characters. I say 'roughly' because I think the limit is actually measured in bytes rather than characters. For Base64 data the two are the same, since every Base64 character is a single byte.
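
To make the chunking idea more concrete, here is a minimal sketch, assuming the file is already a Base64 string and that each chunk is stored as a small JSON object. The `makeChunks` function and its field names (`fileHash`, `index`, `total`, `prev`, `next`, `data`) are hypothetical. In a real version `prev` and `next` would hold block numbers or permlinks, which are only known once each chunk has been written.

```javascript
const crypto = require('crypto');

// Chunk size from the post: about 464 characters below the ~65,000-character
// post limit, leaving room for the metadata fields added below.
const POST_CHUNK_SIZE = 64536;

// Hypothetical helper: split a Base64 string into fixed-size chunks and wrap each
// one with metadata. The field names are illustrative, not a settled format.
function makeChunks(base64Data, chunkSize = POST_CHUNK_SIZE) {
  // SHA-256 of the complete file, repeated in every chunk so reassembly can verify it
  const fileHash = crypto.createHash('sha256').update(base64Data).digest('hex');

  const pieces = [];
  for (let i = 0; i < base64Data.length; i += chunkSize) {
    pieces.push(base64Data.slice(i, i + chunkSize));
  }

  return pieces.map((data, index) => ({
    fileHash,
    index,
    total: pieces.length,
    // Placeholders: a real version would store the block number (or permlink)
    // of the neighboring chunks once they have been written.
    prev: index > 0 ? index - 1 : null,
    next: index < pieces.length - 1 ? index + 1 : null,
    data,
  }));
}
```

Calling `makeChunks(base64, 7536)` would produce custom JSON-sized chunks instead. Because everything in a chunk is ASCII, `JSON.stringify(chunk).length` equals its size in bytes. That makes it easy to confirm that a serialized chunk (data plus metadata) still fits under the limit.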

I chose that approach over custom JSON for a very simple reason: custom JSON can only contain roughly 8,000 characters... which results in far too many chunks. This is especially true because I would ideally only write around 7,536 (or fewer) characters per chunk, to leave room for the same extra information mentioned in the previous paragraph.

To give you an idea of the difference in the number of chunks that would need to be written, consider the following. If I chunk the 'hive_smart_vm.js' file (which is roughly 8.3 megabytes) with the former method (post-sized chunks), I wind up with around 70 chunks that need to be written to the blockchain... but if I use the latter method (custom JSON-sized chunks), I wind up with a whopping 596 chunks!

Please keep in mind that each chunk will also require its own fetch... so the number of chunks also equals the number of API calls required to reassemble the chunks into the original files.
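
Reassembly could follow each chunk's pointer to the next one, which makes the "one fetch per chunk" cost explicit. This is a sketch under assumptions. `fetchChunk` is a hypothetical async function standing in for whichever Hive API call ends up being used. Each chunk is assumed to look like `{ fileHash, next, data }`, where `fileHash` is the SHA-256 of the complete Base64 string.

```javascript
const crypto = require('crypto');

// Hypothetical reassembly loop: follow `next` pointers until there are none,
// then verify the joined data against the stored file hash.
// `fetchChunk(location)` must resolve to an object shaped like { fileHash, next, data }.
async function reassemble(firstLocation, fetchChunk) {
  let location = firstLocation;
  let expectedHash = null;
  let calls = 0;
  const parts = [];

  while (location !== null && location !== undefined) {
    const chunk = await fetchChunk(location); // one API call per chunk
    calls += 1;
    expectedHash = expectedHash || chunk.fileHash;
    parts.push(chunk.data);
    location = chunk.next;
  }

  const data = parts.join('');
  const actualHash = crypto.createHash('sha256').update(data).digest('hex');
  if (actualHash !== expectedHash) {
    throw new Error('Reassembled data does not match the stored file hash');
  }
  return { data, calls };
}
```

The returned `calls` count always equals the number of chunks. This is why the chunk size directly determines how long a launch takes when every chunk has to be fetched from a node.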

In the long run, I honestly do not know whether one approach is better than the other... or whether a combination of both methods might be best. There is also the possibility of using 'comments and replies' instead of a post... or, again, a combination of posts, replies and custom JSON.

It was also brought to my attention that I could possibly reuse the same post over and over again by editing it, with each edit being a separate chunk. This might really be the best approach (if using the post method) because it would keep the posts from looking like spam in the recent feed. The same could be done with comments and replies. I have yet to explore this 'editing' approach or dig into how editing works, so I have no definitive answers, insights or ideas about it at this time.

The following is the main 'hive_smart_vm.js' script, broken into separate code blocks so that I can add comments between the sections. Please note that I have abbreviated the Base64 data for the sake of brevity.

Begin hive_smart_vm.js content:

```javascript
const https = require('https');
```

The above is the only top-level import. Only the built-in Node.js 'https' module is used, for both security and simplicity.

Please note that 'zlib' and 'fs' are imported later in the script, inside the bootVM() function.

```javascript
// Function to get the public key from Hive blockchain
async function getPublicKey(username) {
  return new Promise((resolve, reject) => {
    const options = {
      hostname: 'api.hive.blog',
      port: 443,
      path: '/',
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
    };

    const req = https.request(options, (res) => {
      let data = '';
      res.on('data', (chunk) => {
        data += chunk;
      });
      res.on('end', () => {
        try {
          const response = JSON.parse(data);
          if (response.result && response.result[0] && response.result[0].posting.key_auths.length > 0) {
            const publicKey = response.result[0].posting.key_auths[0][0];
            resolve(publicKey);
          } else {
            reject("User not found, public key not available, or key_auths array is empty");
          }
        } catch (error) {
          reject(error);
        }
      });
    });

    req.on('error', (e) => {
      reject(e);
    });

    // JSON-RPC request payload to get account details
    const payload = JSON.stringify({
      jsonrpc: "2.0",
      method: "condenser_api.get_accounts",
      params: [[username]],
      id: 1
    });

    req.write(payload);
    req.end();
  });
}
```

The above fetches the first posting public key of an account. This is more or less placeholder logic that will later be replaced with logic for handling private keys.

```javascript
// Function to get the current time from Hive blockchain
function getCurrentHiveTime() {
  return new Promise((resolve, reject) => {
    const options = {
      hostname: 'api.hive.blog',
      port: 443,
      path: '/',
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
    };

    const req = https.request(options, (res) => {
      let data = '';
      res.on('data', (chunk) => {
        data += chunk;
      });
      res.on('end', () => {
        try {
          const response = JSON.parse(data);
          if (response.result && response.result.time) {
            // Hive returns the time without a zone suffix; it is UTC, so 'Z' is appended
            const timestamp = new Date(response.result.time + 'Z');
            resolve(timestamp);
          } else {
            reject("Timestamp not found in response");
          }
        } catch (error) {
          reject(error);
        }
      });
    });

    req.on('error', (e) => {
      reject(e);
    });

    // JSON-RPC request payload to get the dynamic global properties
    const payload = JSON.stringify({
      jsonrpc: "2.0",
      method: "condenser_api.get_dynamic_global_properties",
      params: [],
      id: 1
    });

    req.write(payload);
    req.end();
  });
}
```

The above fetches the current time from a Hive node. Please note that it requests the dynamic global properties and then parses the 'time' value from them. I think this can be simplified, but I was unable to find a way to fetch only the timestamp directly.
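
getPublicKey() and getCurrentHiveTime() differ only in the JSON-RPC method, its parameters and the way the result is read, so the shared request code could be pulled into one helper. This is a hypothetical refactor, not part of the project. `hiveRpc`, `parseHiveTime`, `fetchHiveTime` and `fetchPostingKey` are illustrative names. The sketch still uses only the built-in 'https' module and still reads `time` from the dynamic global properties.

```javascript
const https = require('https');

// Hypothetical shared helper: send one JSON-RPC call to a Hive API node over
// HTTPS and resolve with `result`, or reject with the node's `error` object.
function hiveRpc(method, params, hostname = 'api.hive.blog') {
  return new Promise((resolve, reject) => {
    const payload = JSON.stringify({ jsonrpc: '2.0', method, params, id: 1 });
    const req = https.request(
      {
        hostname,
        port: 443,
        path: '/',
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Content-Length': Buffer.byteLength(payload),
        },
      },
      (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => {
          body += chunk;
        });
        res.on('end', () => {
          try {
            const response = JSON.parse(body);
            if (response.error) reject(response.error);
            else resolve(response.result);
          } catch (error) {
            reject(error); // body was not valid JSON (e.g. an HTML error page)
          }
        });
      }
    );
    req.on('error', reject);
    req.end(payload); // write the body and finish the request in one call
  });
}

// Hive reports times such as "2023-12-17T15:09:00" with no zone suffix; they are
// UTC, so "Z" is appended before parsing, exactly as the original script does.
function parseHiveTime(result) {
  if (!result || typeof result.time !== 'string') {
    throw new Error('Timestamp not found in response');
  }
  return new Date(result.time + 'Z');
}

// Each original function becomes a thin wrapper around the helper.
function fetchHiveTime(hostname) {
  return hiveRpc('condenser_api.get_dynamic_global_properties', [], hostname).then(parseHiveTime);
}

function fetchPostingKey(username) {
  return hiveRpc('condenser_api.get_accounts', [[username]]).then((accounts) => {
    const key = accounts && accounts[0] && accounts[0].posting.key_auths[0];
    if (!key) throw new Error('User not found or no posting key available');
    return key[0];
  });
}
```

Keeping the parsing in a separate pure function (`parseHiveTime`) also means it can be tested without a network connection.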

```javascript
// Function to get the local system time
function getLocalTime() {
  return new Date(); // Gets the current system time
}
```

The above is a very basic function to get the local time of the machine that is attempting to launch the VM.

```javascript
// Function to format a timestamp in the desired format
function formatTimestamp(timestamp) {
  return timestamp.toISOString();
}
```

The above formats a timestamp as an ISO 8601 string in UTC, so that the local time and the blockchain time are displayed in the same, comparable format.

```javascript
// Main execution block
(async () => {
  try {
    const username = 'username'; // Replace with actual username input logic
    const publicKey = await getPublicKey(username);
    console.log(`Public Key for ${username}: ${publicKey}`);

    // First Hive time check
    const firstHiveTime = await getCurrentHiveTime();
    // Local time check
    const localTime = getLocalTime();
    // Second Hive time check
    const secondHiveTime = await getCurrentHiveTime();

    if (firstHiveTime instanceof Date && secondHiveTime instanceof Date &&
        !isNaN(firstHiveTime) && !isNaN(secondHiveTime)) {
      const firstHiveTimestamp = firstHiveTime.getTime();
      const secondHiveTimestamp = secondHiveTime.getTime();
      const targetTimestamp = new Date("2023-12-17T15:09:00Z").getTime();

      console.log("First Hive Timestamp:", formatTimestamp(firstHiveTime));
      console.log("Local System Timestamp:", formatTimestamp(localTime));
      console.log("Second Hive Timestamp:", formatTimestamp(secondHiveTime));
      console.log("Target Timestamp:", formatTimestamp(new Date(targetTimestamp)));

      // Check if both Hive time checks are close enough and after the target time
      if (Math.abs(firstHiveTimestamp - secondHiveTimestamp) < 1000 && // 1 second tolerance
          firstHiveTimestamp >= targetTimestamp && secondHiveTimestamp >= targetTimestamp) {
        console.log("VM should be booted now.");
        await bootVM();
      } else {
        console.log("The VM boot is scheduled for a later time, or time check failed.");
      }
    } else {
      console.error("Invalid Hive API response:", firstHiveTime, secondHiveTime);
    }
  } catch (error) {
    console.error('Error:', error);
  }
})();
```

The above does the following:

- The account name is set.

- The public key is fetched.

- The time from a Hive node is fetched.

- The local time is fetched.

- The time from a Hive node is fetched a second time.

- The two Hive times are compared to check that they are less than one second apart (the local time is only logged, not compared).

- The target time (for when the VM can be launched) is set, and both Hive times must be at or after it before bootVM() is called.
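
These checks can also be written as a pure function, which makes them easy to test without a network connection. This is a sketch. `checkBootWindow` is a hypothetical name, and `maxLocalSkewMs` is an added, optional check of the local clock against the Hive readings. The script itself only logs the local time, so the default of `Infinity` reproduces its behavior. One thing worth keeping in mind is that `time` reflects the head block, and Hive produces a block every 3 seconds. Two readings taken close together are therefore normally either identical or 3 seconds apart, so the 1-second tolerance will occasionally fail when a new block lands between the two requests.

```javascript
// Hypothetical pure version of the boot-time gate in the main execution block.
// All times are Date objects; `target` is the earliest allowed boot time.
function checkBootWindow({
  firstHive,
  local,
  secondHive,
  target,
  toleranceMs = 1000,       // same 1 second tolerance as the script
  maxLocalSkewMs = Infinity // optional extra check; Infinity disables it
}) {
  const first = firstHive.getTime();
  const second = secondHive.getTime();

  const hiveConsistent = Math.abs(first - second) < toleranceMs;
  const targetReached = first >= target.getTime() && second >= target.getTime();

  // Distance of the local clock from the midpoint of the two Hive readings
  const localSkewMs = Math.abs(local.getTime() - (first + second) / 2);
  const localOk = localSkewMs <= maxLocalSkewMs;

  return {
    boot: hiveConsistent && targetReached && localOk,
    hiveConsistent,
    targetReached,
    localSkewMs,
  };
}
```

The main block would then call `checkBootWindow({ firstHive: firstHiveTime, local: localTime, secondHive: secondHiveTime, target: new Date(targetTimestamp) })` and boot only when `boot` is `true`.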

```javascript
async function bootVM() {
  var zlib = require("zlib");

  // Promise-based wrappers around zlib's callback API, e.g.:
  // await _g.unzip(Buffer.from('COMPRESSED', 'Base64'))
  const _g = {
    zip: function gzip(input, options) {
      return new Promise(function (resolve, reject) {
        zlib.gzip(input, options, function (error, result) {
          if (!error) resolve(result);
          else reject(Error(error));
        });
      });
    },
    unzip: function ungzip(input, options) {
      return new Promise(function (resolve, reject) {
        zlib.gunzip(input, options, function (error, result) {
          if (!error) resolve(result);
          else reject(Error(error));
        });
      });
    },
  };

  // [columns, rows] of the terminal, used to center the progress messages
  let consize = process.stdout.getWindowSize();

  (async () => {
    var fs = require("fs");

    // When a command is passed as an argument (process.argv[2]), the progress output is suppressed
    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[#10=======]`
      );
    if (!process.argv[2]) console.log("Initializing library...");

    const delay = (delayInms) => {
      return new Promise((resolve) => setTimeout(resolve, delayInms));
    };

    // libv86.js is written to disk only long enough to require() it
    fs.writeFileSync(
      "libv86.js",
      await _g.unzip(
        Buffer.from(
          "H4sIAAAAAAA_ABBREVIATED_BASE64: THIS_IS_THE_LIBV86_JS_FILE",
          "Base64"
        )
      )
    );

    let wasm = await _g.unzip(Buffer.from('H4sIAAAAAAA_ABBREVIATED_BASE64: THIS_IS_THE_V86_WASM_FILE', 'Base64'));

    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[##20======]`
      );

    var V86Starter = require("./libv86.js").V86Starter;
    fs.unlinkSync("libv86.js");

    if (!process.argv[2]) console.log("Initializing BIOS image...");

    const bios = new Uint8Array(
      await _g.unzip(
        Buffer.from('H4sIAAAAAAA_ABBREVIATED_BASE64: THIS_IS_THE_VGABIOS_BIN_FILE', 'Base64')
      )
    ).buffer;

    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[###30=====]`
      );

    if (!process.argv[2]) console.log("Initializing linux image...");

    // The Linux ISO is plain Base64 (not gzipped)
    const linux = new Uint8Array(
      Buffer.from(
        "AAAAAAAAAAA_ABBREVIATED_BASE64: THIS_IS_THE_LINUX_ISO_FILE",
        "Base64"
      )
    ).buffer;

    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[####40====]`
      );

    if (!process.argv[2]) console.log("Initializing STD stream...");

    // Raw mode passes every keypress (including Ctrl+C) to the handler below
    process.stdin.setRawMode(true);
    process.stdin.resume();
    process.stdin.setEncoding("utf8");

    if (!process.argv[2]) process.stdout.write("\x1bc"); // clear the screen
    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2)};${Math.floor(consize[0] / 2 - 7)}HStarting Hive Smart VM...`
      );
    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[####50====]`
      );

    let initialized = !!process.argv[2];

    const emulatorOptions = {
      bios: { buffer: bios },
      cdrom: { buffer: linux },
      wasm_fn: async (env) =>
        (await WebAssembly.instantiate(wasm, env)).instance.exports,
      autostart: true,
      fastboot: true,
    };

    // Resume from the saved state if one exists
    if (fs.existsSync("NodeVM_autosave.bin")) {
      let b = fs.readFileSync("NodeVM_autosave.bin");
      emulatorOptions.initial_state = b.buffer.slice(
        b.byteOffset,
        b.byteOffset + b.byteLength
      );
    }

    var emulator = new V86Starter(emulatorOptions);

    if (!process.argv[2]) await delay(1000);
    if (!process.argv[2]) process.stdout.write("\x1bc");
    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2)};${Math.floor(consize[0] / 2 - 14.5)}HBooting Linux, Please standby...`
      );
    if (!process.argv[2])
      process.stdout.write(
        `\x1b[${Math.floor(consize[1] / 2) + 1};${Math.floor(consize[0] / 2 - 7)}H[######75==]`
      );

    // Recent serial output and keyboard input
    var stackOut = [],
      stackIn = [];

    emulator.add_listener("serial0-output-char", async function (chr) {
      if (chr <= "~" && stackOut.length > 47) {
        if (stackOut.length == 48 && process.argv[2]) {
          // Send the command-line arguments to the VM shell as a command
          emulator.serial0_send(process.argv.slice(2).join(" ") + "\n");
        } else if (
          process.argv[2] &&
          stackOut.slice(55).join("").endsWith("/root%")
        ) {
          // The command has finished: print its output, save the state and exit
          console.log(
            stackOut
              .slice(55 + process.argv.slice(2).join(" ").length)
              .slice(0, -9)
              .join("")
          );
          let state = await emulator.save_state();
          fs.writeFileSync("NodeVM_autosave.bin", Buffer.from(state));
          emulator.stop();
          process.stdin.pause();
        } else if (!process.argv[2]) process.stdout.write(chr);
        //console.log(stackOut.slice(-99));
      }

      stackOut.push(chr);
      if (stackOut.length > 100 && !process.argv[2]) stackOut.shift();

      // Log in as root automatically when the Buildroot login prompt appears
      //if (stack.join("") == "\r\r\nWelcome to Buildroot\r\n\r(none) login: ") {
      if (
        stackOut
          .join("")
          .endsWith("Welcome to Buildroot\r\n\r(none) login: ")
      ) {
        emulator.serial0_send("root\n");
        if (!initialized) {
          process.stdout.write("\x1bc");
          initialized = true;
        }
        if (!process.argv[2])
          console.log(
            'For a list of commands, type "busybox" | to exit, press Ctrl+C'
          );
      }
    });

    process.stdin.on("data", async function (c) {
      stackIn.push(c);
      if (stackIn.length > 100) stackIn.shift();

      // Ctrl+C at a shell prompt saves the state and exits; any other key goes to the VM
      if (
        c === "\u0003" &&
        /\/{0,1}[a-zA-Z-\d_]+(%| #) $/.test(stackOut.join(""))
      ) {
        process.stdout.write("\x1bc");
        let state = await emulator.save_state();
        fs.writeFileSync("NodeVM_autosave.bin", Buffer.from(state));
        emulator.stop();
        process.stdin.pause();
      } else {
        emulator.serial0_send(c);
      }
    });
  })();
}
```

The above is the logic used to boot the VM itself. It imports 'zlib' and sets up helpers to handle both plain Base64 data and Gzipped data that has been Base64 encoded. The libv86.js, vgabios.bin and v86.wasm files are all Gzipped and then Base64 encoded, but the linux.iso file is only Base64 encoded. libv86.js is briefly written to disk so that it can be loaded with require(), and it is deleted immediately afterwards.
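
For reference, the embedded strings could be produced with a packing step like the following. This is a sketch that assumes the original files are available locally. `pack`, `unpack`, `packPlain` and `unpackPlain` are hypothetical helpers. The sketch also explains why every gzipped blob starts with `H4sI`: that is the Base64 form of the first three gzip header bytes (`1f 8b 08`).

```javascript
const zlib = require('zlib');

// Hypothetical packing step: gzip a file's bytes, then Base64-encode the result.
// This is the form libv86.js, vgabios.bin and v86.wasm take inside the script.
function pack(buffer) {
  return zlib.gzipSync(buffer).toString('base64');
}

// Reverse of pack(): the synchronous equivalent of
// `await _g.unzip(Buffer.from(..., 'Base64'))` in bootVM().
function unpack(base64) {
  return zlib.gunzipSync(Buffer.from(base64, 'base64'));
}

// linux.iso is stored as plain Base64 (no gzip), so it only needs encoding/decoding.
const packPlain = (buffer) => buffer.toString('base64');
const unpackPlain = (base64) => Buffer.from(base64, 'base64');
```

For example, `pack(fs.readFileSync('v86.wasm'))` would produce a string suitable for the corresponding `Buffer.from(..., 'Base64')` call in bootVM().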

When the VM boots, it looks for a 'save state' file named NodeVM_autosave.bin and, if the file exists, resumes from it. The file is (re)written when the VM exits, either when Ctrl+C is pressed at a shell prompt or after a command passed as a command-line argument has finished. I was originally going to remove this logic but decided to keep it, because it might prove to be a good way to get data directly out of the VM without having to set up networking inside the VM itself.

End hive_smart_vm.js content.

Alright, that is all rather simple and straightforward, but it lacks the kind of robust security that I was looking for if this (or a similar method) is used to create smart contracts or simply to run a secure VM. So I wrote the next script and nested 'hive_smart_vm.js' inside it in its entirety.

Begin toad_aes_json.js content:

```javascript
const crypto = require('crypto');
const fs = require('fs');
const os = require('os');
const path = require('path');
const https = require('https');
const zlib = require('zlib');
const readline = require('readline');
```

The above are the imports used by toad-js so that it can handle the AES encryption and launch the encrypted version of the hive_smart_vm.js script nested inside it.

```javascript
// Function to get the public key from Hive blockchain
async function getPublicKey(username) {
  return new Promise((resolve, reject) => {
    const options = {
      hostname: 'api.hive.blog',
      port: 443,
      path: '/',
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
    };

    const req = https.request(options, (res) => {
      let data = '';
      res.on('data', (chunk) => {
        data += chunk;
      });
      res.on('end', () => {
        try {
          const response = JSON.parse(data);
          if (response.result && response.result[0] && response.result[0].posting.key_auths.length > 0) {
            const publicKey = response.result[0].posting.key_auths[0][0];
            resolve(publicKey);
          } else {
            reject("User not found, public key not available, or key_auths array is empty");
          }
        } catch (error) {
          reject(error);
        }
      });
    });

    req.on('error', (e) => {
      reject(e);
    });

    const payload = JSON.stringify({
      jsonrpc: "2.0",
      method: "condenser_api.get_accounts",
      params: [[username]],
      id: 1
    });

    req.write(payload);
    req.end();
  });
}
```

The above is the same function for getting a public key from a Hive node that is found in the hive_smart_vm.js script.

```javascript
function getCurrentHiveTime(nodeUrl) {
  return new Promise((resolve, reject) => {
    const options = {
      hostname: nodeUrl, // Use the node URL passed as a parameter
      port: 443,
      path: '/',
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
    };

    const req = https.request(options, (res) => {
      let data = '';
      res.on('data', (chunk) => {
        data += chunk;
      });
      res.on('end', () => {
        try {
          const response = JSON.parse(data);
          if (response.result && response.result.time) {
            const timestamp = new Date(response.result.time + 'Z');
            resolve(timestamp);
          } else {
            reject("Timestamp not found in response");
          }
        } catch (error) {
          reject(error);
        }
      });
    });

    req.on('error', (e) => {
      reject(e);
    });

    const payload = JSON.stringify({
      jsonrpc: "2.0",
      method: "condenser_api.get_dynamic_global_properties",
      params: [],
      id: 1
    });

    req.write(payload);
    req.end();
  });
}
```

The above is the same function for getting the time from a Hive node as in the hive_smart_vm.js script, except that it takes the node's hostname as a parameter so that several nodes can be checked.

```javascript
// Function to get the local system time
function getLocalTime() {
  return new Date(); // Gets the current system time
}
```

The above is the same function as in the hive_smart_vm.js script for getting the local time of the user's machine.

```javascript
// Function to format a timestamp in the desired format
function formatTimestamp(timestamp) {
  return timestamp.toISOString();
}
```

The above formats a timestamp as an ISO 8601 string in UTC so that the local time and the blockchain time are displayed in the same format. It is also identical to the function in the hive_smart_vm.js script.

```javascript
function decodeAndDecompress(chunks) {
  try {
    const concatenatedData = chunks.join('');
    const decodedData = Buffer.from(concatenatedData, 'base64');
    return zlib.gunzipSync(decodedData).toString();
  } catch (error) {
    console.error('Error decoding and decompressing data:', error);
    return null; // Return null or handle the error appropriately
  }
}
```

The above is a synchronous version of the Base64 and Gzip decoding used in the hive_smart_vm.js script, so that the same features are also available in the toad_aes_json.js script.

```javascript
// Function to execute the JavaScript content
function executeJavaScript(jsContent) {
  try {
    // Evaluate the JavaScript code
    eval(jsContent);
  } catch (error) {
    console.error('Error executing JavaScript:', error);
  }
}
```

The above executes the hive_smart_vm.js script that is nested inside the toad_aes_json.js script. I am not sure if using 'eval' is the best approach, but it was the simplest way I could find to run the nested script.
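
One possible alternative to `eval` is the `Function` constructor. It compiles the script in the global scope, so the script cannot see the loader's local variables, which direct `eval` can. `require` then has to be passed in explicitly. This is a suggestion rather than what the project does, and it is not a security sandbox: the script still has full access to Node.js through `require` and the globals. hive_smart_vm.js declares everything it uses itself (it relies only on `require` and Node.js globals), so it should run the same way.

```javascript
// Hypothetical drop-in replacement for executeJavaScript(): compile the decrypted
// script with the Function constructor and hand it `require` explicitly.
// Functions created this way run in the global scope, so the script cannot read
// local variables of the loader (unlike direct eval). NOT a sandbox.
function executeJavaScript(jsContent) {
  try {
    const run = new Function('require', jsContent);
    return run(require);
  } catch (error) {
    console.error('Error executing JavaScript:', error);
    return undefined;
  }
}
```

The function body is not in strict mode unless the script opts in, so the nested script keeps working exactly as it does under `eval`.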

```javascript
function decryptData(encryptedData, secretKey) {
  try {
    // Assuming the IV is prepended to the encrypted data separated by a colon
    const parts = encryptedData.split(':');
    const iv = Buffer.from(parts[0], 'base64');
    const encrypted = parts[1];
    const decipher = crypto.createDecipheriv('aes-256-cbc', Buffer.from(secretKey, 'hex'), iv);
    let decrypted = decipher.update(encrypted, 'base64', 'utf-8');
    decrypted += decipher.final('utf-8');
    return decrypted;
  } catch (error) {
    console.error('Error decrypting data:', error);
    return null;
  }
}
```

The above is the decryption logic (AES-256-CBC) used to decrypt the encrypted, nested version of the hive_smart_vm.js script.
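
The encrypted string stored in TempFS has to be produced somehow, and a matching encryption step could look like this. It is a sketch, not the project's actual tooling. `encryptData` is a hypothetical name, and it assumes the same format that decryptData() expects: a Base64 IV, a colon, then Base64 ciphertext. The colon is a safe separator because it is not part of the Base64 alphabet. The decryption function is repeated so that the example runs on its own.

```javascript
const crypto = require('crypto');

// Hypothetical counterpart to decryptData(): AES-256-CBC with a random IV,
// output as "<Base64 IV>:<Base64 ciphertext>". The key is 64 hex characters.
function encryptData(plainText, secretKeyHex) {
  const iv = crypto.randomBytes(16); // CBC needs a fresh, unpredictable 16-byte IV per message
  const cipher = crypto.createCipheriv('aes-256-cbc', Buffer.from(secretKeyHex, 'hex'), iv);
  let encrypted = cipher.update(plainText, 'utf-8', 'base64');
  encrypted += cipher.final('base64');
  return `${iv.toString('base64')}:${encrypted}`;
}

// Same logic as decryptData() in the script, repeated so this sketch is self-contained.
function decryptData(encryptedData, secretKeyHex) {
  const [ivB64, encrypted] = encryptedData.split(':');
  const decipher = crypto.createDecipheriv(
    'aes-256-cbc',
    Buffer.from(secretKeyHex, 'hex'),
    Buffer.from(ivB64, 'base64')
  );
  return decipher.update(encrypted, 'base64', 'utf-8') + decipher.final('utf-8');
}

// Generate a random 32-byte key once and keep it out of the published post.
const key = crypto.randomBytes(32).toString('hex');
```

One design caveat is that CBC mode provides no integrity check. A wrong key usually makes `decipher.final()` throw a padding error, but occasionally it yields garbage that only fails later in the gunzip step. An authenticated mode such as AES-256-GCM would reject a wrong key or tampered data outright.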

```javascript
// Function to prompt for decryption key
function promptForDecryptionKey(encryptedData) {
  return new Promise((resolve) => {
    const rl = readline.createInterface({
      input: process.stdin,
      output: process.stdout
    });

    rl.question('Please enter the decryption key: ', (inputKey) => {
      const decryptedData = decryptData(encryptedData, inputKey);
      rl.close();
      resolve(decryptedData);
    });
  });
}
```

The above is a simple method for prompting the user for the key needed to decrypt the encrypted hive_smart_vm.js.
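
A small guard could make a mistyped key easier to diagnose. AES-256 needs a 32-byte key, which means exactly 64 hex characters. `Buffer.from(str, 'hex')` silently stops at the first invalid character, and a key of the wrong length makes `createDecipheriv()` throw an "Invalid key length" error, which currently surfaces only as a generic decryption failure. This is a sketch, and `normalizeAes256HexKey` is a hypothetical helper.

```javascript
// Hypothetical guard for promptForDecryptionKey(): trim surrounding whitespace
// (e.g. from a pasted key) and accept only exactly 64 hex characters.
// Returns the cleaned key, or null if it cannot be a valid AES-256 key.
function normalizeAes256HexKey(input) {
  const key = String(input).trim();
  return /^[0-9a-fA-F]{64}$/.test(key) ? key : null;
}
```

Inside the `rl.question` callback, this would look like `const key = normalizeAes256HexKey(inputKey);`, followed by printing a clear message when `key` is `null` and otherwise passing the cleaned `key` (not the raw input) to decryptData().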

```javascript
// Main function to process embedded JSON data
async function main() {
  // Extract the base64 encrypted data from TempFS
  const base64EncryptedData = liveDnaData.dna_structure.Genomes.FileSystem.TempFS.join('');

  // Prompt for decryption key and decrypt the data
  const decryptedJsContent = await promptForDecryptionKey(base64EncryptedData);

  // Decode and decompress if decryption is successful
  if (decryptedJsContent) {
    const decodedJsContent = decodeAndDecompress([decryptedJsContent]);

    // Store the decoded content in a temporary strand
    liveDnaData.dna_structure.TemporaryStrands.push({
      name: 'tempStrand',
      content: decodedJsContent
    });

    // Execute the JavaScript from the temporary strand
    executeJavaScript(decodedJsContent);
  } else {
    console.error('Decryption failed or key was incorrect');
  }
}
```

The above extracts the Base64 encrypted data from the JSON key named TempFS, prompts for the decryption key, decodes and decompresses the data once it has been successfully decrypted, stores the result in a temporary strand within the JSON, and then executes the JavaScript.

```javascript
// Main execution block
(async () => {
  try {
    const username = 'username'; // Replace with actual username input logic
    const publicKey = await getPublicKey(username);
    console.log(`Public Key for ${username}: ${publicKey}`);

    // Set up multiple Hive node URLs
    const hiveNodes = [
      'anyx.io',
      'api.hive.blog',
      // ... Add more nodes as needed
    ];

    const targetTimestamp = new Date("2023-12-17T15:09:00Z").getTime();
    let allNodesPassed = true;

    for (let node of hiveNodes) {
      const firstHiveTime = await getCurrentHiveTime(node);
      const localTime = getLocalTime();
      const secondHiveTime = await getCurrentHiveTime(node);

      console.log(`Node ${node} - First Hive Time: ${formatTimestamp(firstHiveTime)}`);
      console.log(`Node ${node} - Local System Time: ${formatTimestamp(localTime)}`);
      console.log(`Node ${node} - Second Hive Time: ${formatTimestamp(secondHiveTime)}`);
      console.log("Target Timestamp:", formatTimestamp(new Date(targetTimestamp)));

      const firstHiveTimestamp = firstHiveTime.getTime();
      const secondHiveTimestamp = secondHiveTime.getTime();

      if (!(Math.abs(firstHiveTimestamp - secondHiveTimestamp) < 1000 && // 1 second tolerance
            firstHiveTimestamp >= targetTimestamp && secondHiveTimestamp >= targetTimestamp)) {
        console.log(`Node ${node} failed the time checks.`);
        allNodesPassed = false;
        break; // Stop checking further if any node fails
      }
    }

    if (allNodesPassed) {
      console.log("All nodes passed the checks. Executing main script logic.");
      await main(); // Execute the main function after passing the checks
    } else {
      console.log("One or more nodes failed the checks. Exiting script.");
    }
  } catch (error) {
    console.error('Error:', error);
  }
})();
```

The above sets the username, fetches the public key, sets up the list of Hive nodes to check, sets the target time, and then verifies for each node that its two time readings are less than one second apart and at or after the target time.
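
The loop above checks the nodes one at a time and stops at the first failure. An unreachable node throws, and the error ends up in the outer catch. A variant could query all nodes concurrently and treat an unreachable node as a failed check. This is a sketch: `checkNodes` is a hypothetical name, and `getHiveTime` is injected (for example the script's getCurrentHiveTime) so that the logic can be exercised without network access.

```javascript
// Hypothetical concurrent variant of the multi-node loop. Every node is checked
// in parallel; all of them must pass for `allPassed` to be true.
async function checkNodes(nodes, getHiveTime, targetMs, toleranceMs = 1000) {
  const results = await Promise.all(
    nodes.map(async (node) => {
      try {
        const first = (await getHiveTime(node)).getTime();
        const second = (await getHiveTime(node)).getTime();
        const passed =
          Math.abs(first - second) < toleranceMs && first >= targetMs && second >= targetMs;
        return { node, passed };
      } catch (error) {
        // An unreachable node counts as a failure instead of aborting the whole run.
        return { node, passed: false, error: String(error) };
      }
    })
  );
  return { allPassed: results.every((r) => r.passed), results };
}
```

The per-node `results` array also records which node failed and why, which is useful when the list of nodes grows.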

```javascript
// Embedded JSON data (live_dna_data.json content)
// Declared at the end of the file: main() only reads it after the awaited network
// calls in the main execution block have resolved, by which time the whole file
// has been evaluated.
const liveDnaData = {
  "dna_structure": {
    "kernel": {
      "version": "1.0",
      "tasks": [],
      "communicationBus": {},
      "metadata": {}
    },
    "Genomes": {
      "OS": {
        "v86": {},
        "kernel": {}
      },
      "FileSystem": {
        "TempFS": ["MnKoHz17MdFE_ABBREVIATED_ENCRYPTED_DATA: THIS_IS_A_ENCRYPTED_VERSION_OF_THE_HIVE_SMART_VM_JS_FILE"], // Leave this empty for now
        "UserFiles": {}
      },
      "WebFrontEnd": {
        "HTML": {},
        "CSS": {},
        "JavaScript": {}
      },
      "WebBackEnd": {
        "ServerScripts": {},
        "Database": {}
      },
      "Desktop": {
        "UI": {},
        "Applications": {}
      }
    },
    "TemporaryStrands": [] // Create an array for temporary strands
  }
};
```

The above is the JSON portion of the script, where the encrypted data is stored and where the temporary strand that receives the decrypted data is located. Please note that the JSON has extra entries (keys) because it was originally part of a different project. I have not removed these extra components yet because I may wind up using them later.

End toad_aes_json.js content.

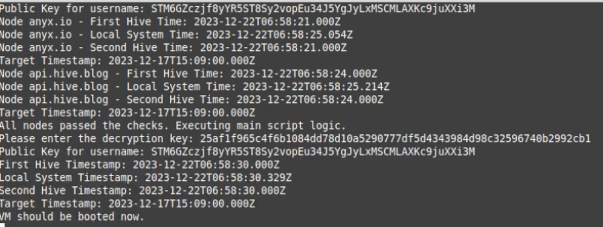

Here is an image of the VM start process when it is launched via the toad_aes_json.js script.

It shows multiple time checks because the checks run both in the toad_aes_json.js script and in the hive_smart_vm.js script nested inside it.