Research diaries #21: Open-source generative AI for videos with Comfy

AI already outperforms humans at many tasks, which can be disruptive for many communities. I think it would be hard to stop or significantly slow down its advancement. AI is also creating new opportunities, as many jobs are now aided by AI tools. What would definitely be damaging is if all the tech behind it became proprietary. So I think it is great that more groundbreaking open-source AI tools are being developed.

Attention grabber

Although OpenAI has "open" in its name, we cannot independently run their networks. Other tools like Fooocus and NVIDIA's Chat with RTX do run locally on your own machine. However, they are limited in the sense that significantly tweaking them is hard. So today I want to write about the next best thing to coding your own networks.

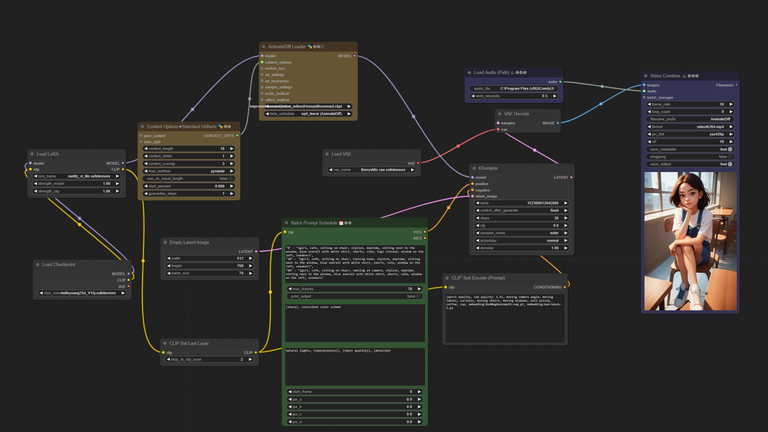

Comfy-UI architecture

Coding neural nets has become a somewhat visual process: in a great number of architectures you are connecting neural network operators to each other in a specific way. Once you put this down in code, the connections are not that transparent anymore. However, UI peeps have created a platform where the whole procedure becomes visual: Comfy-UI. https://github.com/comfyanonymous/ComfyUI

In Comfy-UI you can download neural network operators and connect them to each other to build a neural network architecture for generative tasks. Ordinarily you would have data on which you train a neural network. However, training is an expensive task that requires a high-end GPU. Comfy takes this into account, as it is mainly meant for pre-trained networks. These can for example be found on https://civitai.com/ or on https://huggingface.co/. A caveat here is that pre-trained networks are usually trained under certain conditions. For example, the training images may all have had a certain size, which means that for optimal results you should generate images of that same size.
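To make the "connect operators to each other" idea concrete, here is a rough sketch of a small text-to-image graph written as a plain Python dictionary, loosely in the style of the JSON that Comfy-UI can export. The node class names, input names, checkpoint file name, prompts and the 512x512 size are all assumptions for illustration, so check them against a workflow exported from your own installation. The helper only works out an order in which the nodes could run; it does not talk to Comfy-UI.

```python
# Hypothetical graph, loosely modelled on Comfy-UI's exported workflow JSON.
# Class names and input names are assumptions; verify against your own export.
# A value like ["1", 0] means "output slot 0 of node 1".
workflow = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "my_checkpoint.safetensors"}},  # placeholder file
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a cat on a sofa", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "extra fingers, blurry", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          # Assumed size; use the size your checkpoint was trained on.
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0], "negative": ["3", 0],
                     "latent_image": ["4", 0], "seed": 0, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
}


def upstream(node):
    """Return the ids of the nodes whose outputs feed into `node`."""
    return [value[0] for value in node["inputs"].values()
            if isinstance(value, list) and len(value) == 2 and isinstance(value[0], str)]


def execution_order(graph):
    """Order the nodes so each one comes after its inputs (assumes no cycles)."""
    order, done = [], set()

    def visit(node_id):
        if node_id in done:
            return
        for parent in upstream(graph[node_id]):
            visit(parent)
        done.add(node_id)
        order.append(node_id)

    for node_id in sorted(graph):
        visit(node_id)
    return order


if __name__ == "__main__":
    for node_id in execution_order(workflow):
        print(node_id, workflow[node_id]["class_type"])
```

The point of the sketch is that the visual graph and the code are the same thing: every wire in the UI is just a reference from one node's input to another node's output.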

Comfy-UI was originally intended just to generate images. Maybe this is not so impressive if you have played with DALL-E. The cooler thing is that you can also use Comfy-UI for video generation. Prompting is frame based: you specify a prompt at given frame numbers. Let's go over the core of the architecture I built.

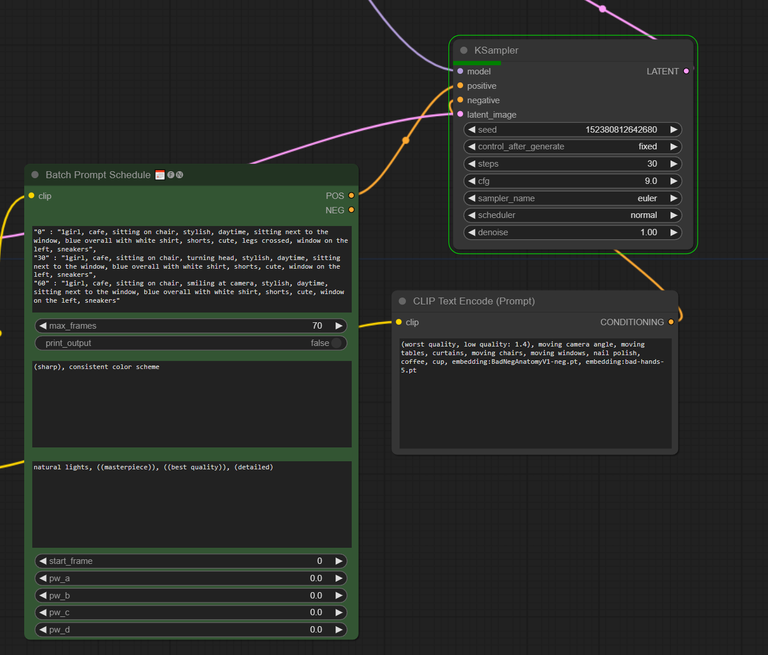

Zooming in on prompts and the sampler

The green block is the prompt scheduler. The "x" indicates at which frame you want to see what.
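As a rough illustration of frame-based prompting, the sketch below maps keyframe numbers to prompts and looks up which prompt is active at a given frame. The schedule, the prompts and the function name `prompt_for_frame` are made up for this example. It simply holds each prompt until the next keyframe; an actual scheduler node may instead blend between neighbouring prompts, so treat this as a simplification.

```python
def prompt_for_frame(schedule, frame):
    """Return the prompt of the latest keyframe at or before `frame`."""
    keys = sorted(k for k in schedule if k <= frame)
    if not keys:
        raise ValueError("no keyframe at or before frame %d" % frame)
    return schedule[keys[-1]]


# Hypothetical schedule: keyframe number -> prompt.
schedule = {
    0: "a cat sitting on a sofa",
    16: "a cat jumping off the sofa",
    32: "a cat running through the garden",
}

if __name__ == "__main__":
    for frame in (0, 15, 16, 40):
        print(frame, "->", prompt_for_frame(schedule, frame))
```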

The animation here works via the pretrained networks of AnimateDiff (https://github.com/guoyww/AnimateDiff). This is in no way perfect. Your model might suddenly get an extra hand or finger, or things might suddenly appear and vanish in the background. You can improve on this a bit by feeding it negative prompts. This is what the CLIP Text Encode (Prompt) block does. You could also put the negative prompts in a Batch prompt scheduler if you want to do it scene based.
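Why would a negative prompt help? A minimal sketch, assuming the sampler uses classifier-free guidance and that the negative prompt takes the place of the usual empty prompt in it (my understanding of how Stable Diffusion front ends commonly do this, not something taken from the Comfy-UI source). The function name and the toy numbers are hypothetical; in practice the two predictions come from running the network once per prompt.

```python
def guided_prediction(pred_positive, pred_negative, cfg_scale):
    """Push the prediction away from the negative prompt, towards the positive one.

    Both inputs are the network's predictions for the same noisy latent,
    one conditioned on the positive prompt and one on the negative prompt.
    """
    return [neg + cfg_scale * (pos - neg)
            for pos, neg in zip(pred_positive, pred_negative)]


if __name__ == "__main__":
    positive = [1.0, 2.0]   # toy prediction for "a cat on a sofa"
    negative = [0.0, 1.0]   # toy prediction for "extra fingers, blurry"
    print(guided_prediction(positive, negative, cfg_scale=7.0))
```

With a scale of 1 the result is just the positive prediction; larger scales move further away from whatever the negative prompt describes.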

The two prompt blocks connect to the KSampler. There is an underlying representation, referred to as a latent, which the KSampler first distorts with noise and then cleans up step by step under the guidance of the prompts. This yields a refined version following your directive. The KSampler has many parameters you could tweak. There is no real guidance on how these will affect the generated video. It is mostly a trial-and-error process to find what works for your choice of pre-trained networks and architecture. But a good ground rule is to start as simple as possible. For example, there are many samplers to pick from, but euler is the simplest.
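To give a feeling for why euler counts as the simplest sampler, here is a toy sketch of Euler steps over a decreasing list of noise levels. It is not the Comfy-UI implementation: the latent is a plain list of numbers, `toy_denoiser` is a stand-in that always predicts the same clean target instead of calling a network, and the noise levels are made up.

```python
def euler_sample(x, sigmas, denoise):
    """Run Euler steps from noise level sigmas[0] down to sigmas[-1].

    `denoise(x, sigma)` returns an estimate of the clean latent.
    Every sigma except the last one must be non-zero.
    """
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = denoise(x, sigma)
        # Slope pointing from the clean estimate to the current noisy latent.
        slope = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        # One straight-line step to the next (lower) noise level.
        x = [xi + (sigma_next - sigma) * si for xi, si in zip(x, slope)]
    return x


target = [0.5, -1.0, 2.0]  # hypothetical "clean" latent


def toy_denoiser(x, sigma):
    """Stand-in for the network: always predicts the same clean latent."""
    return target


noisy = [3.0, 3.0, 3.0]
result = euler_sample(noisy, [10.0, 5.0, 2.0, 1.0, 0.0], toy_denoiser)

if __name__ == "__main__":
    print(result)
```

Each step just follows a straight line towards the current clean estimate, with no extra correction or added randomness, which is why it makes a good baseline before trying the fancier samplers.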

Anyway, maybe in a future post I will go through more of the details or add-ons for building a good workflow in Comfy-UI. And as always, here is the cat tax.

Cat tax
