Truth in the Age of AI: YouTube's AI-generated Disclosure Label

Many platforms and AI providers are taking ethical measures to curb the abuse of AI, and YouTube has joined them with its new AI-generated content label. Essentially, this lets viewers on YouTube quickly identify videos that may contain AI-generated or synthetically produced content.

Just about anything seems possible with AI now. In virtually every facet of our everyday lives, AI is causing some level of disruption. It is enhancing functions across various tools, and it is bringing to life things that many people had never thought of before.

The Rise of Text-to-Video AI Models

Text-to-video AI models such as OpenAI's Sora, which are on the rise lately, are taking us into a future where much that would once have been deemed very difficult, if not impossible, to achieve now seems doable with a simple prompt. Some of the videos these models generate can pass as real to unsuspecting viewers.

"How do we know what is real and what isn't?" The answer to that question is getting blurry with the rapid development of AI. One may not be able to tell whether something really happened just by looking at it, and that could lead to even more problems than we have seen with deepfakes lately. YouTube is looking for ways to address this.

The Challenge of Deepfakes

Imagine seeing yourself in a video doing or saying something you have never done and never would, yet it looks so real that it baffles you. It could even make unsuspecting people believe that it's actually you in that video. That's an example of a deepfake, and it is very achievable with AI these days.

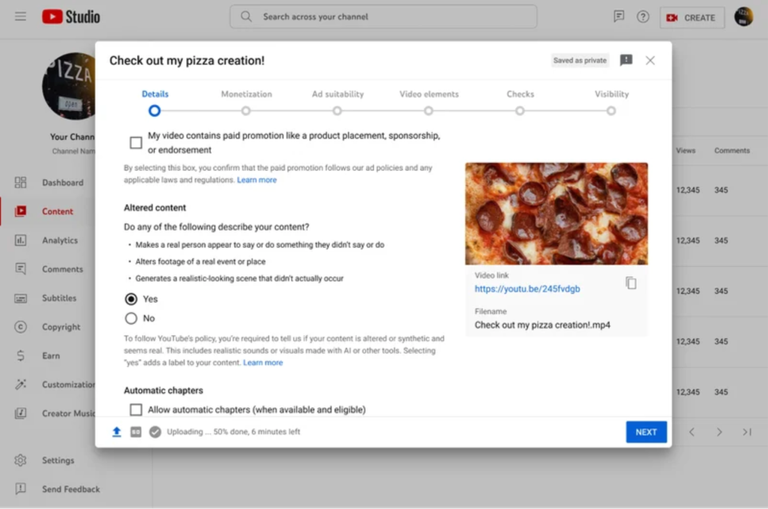

YouTube now requires creators to indicate, while uploading, whether their video contains anything AI-generated or synthetic. It's a checkbox that asks exactly that, and creators are expected to tick it (or not) by "being honest."

For now, YouTube is relying on that honesty, though it has said it is investing in tools to detect AI-generated content. I doubt such "AI-generated content detection tools" will ever work reliably, especially as it grows harder to tell real from fake. And this is just the dawn of AI.

The Role of Blockchain in Content Verification

In the meantime, since YouTube only has the honesty approach to offer, it will also actively look out for and label AI-related content itself, especially when creators "fail" to do so when uploading.

So in the description of an AI-related video, we will see a label stating that it contains AI-generated or synthetic content.

To some degree, this can counter the adverse effects of AI-generated videos by informing unsuspecting viewers that a video may contain things that aren't real but look it. Most people would want to know that their favourite celebrity, say a musician, didn't actually do or say something, even if it feels so real.

One viable solution I see for verifying content is blockchain technology. With a blockchain, the origin of data can be traced and attributed to a source. Consumers can then feel a little safer knowing where the content they consume comes from and judging whether it's trustworthy.

Impact on Creators and Consumers

One fundamental feature of a blockchain is that it is tamper-resistant: once something is recorded, it cannot be changed without the change being evident. Recording their content this way may be something creators need to do to help consumers "trust" them and their content. In a way, this could tackle deepfakes, bring integrity to deserving brands, and help catch deepfake perpetrators. Especially now, when politics are heated and information is crucial in our everyday lives, the veracity of content is critical.
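To make the tamper-evidence idea above a little more concrete, here is a minimal Python sketch of a hash-linked record of video fingerprints. It is an illustration under stated assumptions, not how YouTube or any particular blockchain works: it runs in a single process with no network, consensus, or digital signatures, and every name in it (`Block`, `append`, `is_valid`, `is_registered`, the creator IDs) is hypothetical. Each record stores a SHA-256 fingerprint of a video plus the hash of the previous record, so editing an earlier record breaks the link that follows it.

```python
import hashlib
import json
from dataclasses import dataclass

# Hypothetical sketch: a single-process hash chain with no consensus or
# signatures, only meant to show why recorded data is hard to alter silently.


@dataclass
class Block:
    index: int
    content_hash: str  # SHA-256 fingerprint of the uploaded video bytes
    creator: str       # hypothetical creator identifier
    prev_hash: str     # hash of the previous block, linking the chain

    def block_hash(self) -> str:
        # Hash all fields in a deterministic (sorted-key) JSON encoding.
        payload = json.dumps(self.__dict__, sort_keys=True).encode()
        return hashlib.sha256(payload).hexdigest()


def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def append(chain: list[Block], data: bytes, creator: str) -> None:
    # The first block points at an all-zero placeholder hash.
    prev = chain[-1].block_hash() if chain else "0" * 64
    chain.append(Block(len(chain), fingerprint(data), creator, prev))


def is_valid(chain: list[Block]) -> bool:
    # Every block must reference the current hash of the block before it;
    # altering an earlier block changes its hash and breaks this link.
    for prev, cur in zip(chain, chain[1:]):
        if cur.prev_hash != prev.block_hash():
            return False
    return True


def is_registered(chain: list[Block], data: bytes, creator: str) -> bool:
    # True only if these exact bytes were recorded under this creator.
    fp = fingerprint(data)
    return any(b.content_hash == fp and b.creator == creator for b in chain)


chain: list[Block] = []
append(chain, b"original video bytes", "creator_a")
append(chain, b"second video bytes", "creator_a")
print(is_valid(chain))                                              # True
print(is_registered(chain, b"original video bytes", "creator_a"))   # True
print(is_registered(chain, b"edited deepfake bytes", "creator_a"))  # False
chain[0].creator = "someone_else"  # tamper with an earlier record
print(is_valid(chain))                                              # False
```

A fingerprint match only shows that those exact bytes were registered; even a re-encoded or trimmed copy of a genuine video would not match, so this sketch illustrates traceability and tamper evidence rather than a complete deepfake defence.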

Until we see working measures implemented to curb deepfakes and protect consumers, YouTube will have to make do with its checkbox method. It might feel a little weird for creators to tick that checkbox, indicating that something in their video is synthetic or AI-generated. Or maybe not.

What are your thoughts on this, and how might it affect you as a creator or consumer?

Image 1, 2


We shouldn't be surprised by the negative side of AI these days. Data integrity is really under threat, making consumers skeptical of media information. Introducing a checkbox won't curb all AI abuse anyway. We need to be careful about which sources we trust these days.

The abuse of AI was bound to happen anyway. It's not AI that's the problem but humans. All we can do is try to curb it. But of course, the checkbox won't do much. We just have to be careful on our own as consumers.


I'm loving how interactions and engagement with AI are turning out. Protecting users is important.

Making AI safe to use and interact with has been a top priority for most AI providers these days. It's very important.