The Race To Get The Best GPU Possible Might Be Nearly Over

I wanted to use the same kind of picture, but with a 4080 card in a different context. Regardless, it's a throwaway image for what's really happening: GPU prices are actually back to normal (sort of). Not for the reasons you and I would like to think, but whatever problems remain, I have a better idea for your performance woes. Things are going to get better from here.

The sad reality is that we have to accept GPU pricing no longer matches what it rightfully should be. Nobody knows what's really going on with manufacturing and distribution. Most of the GPUs we're seeing now are old stock, and until the world's problems and the politics around them ease up, we won't be seeing new GPUs like the RTX 4000 or RX 7000 series anytime soon.

So buckle up, it's time to squeeze as much as possible out of the hardware you have. Wanting a higher resolution or more FPS no longer has to mean paying a high price for a new GPU. The easiest upgrades are elsewhere.

Tinkering With The Settings

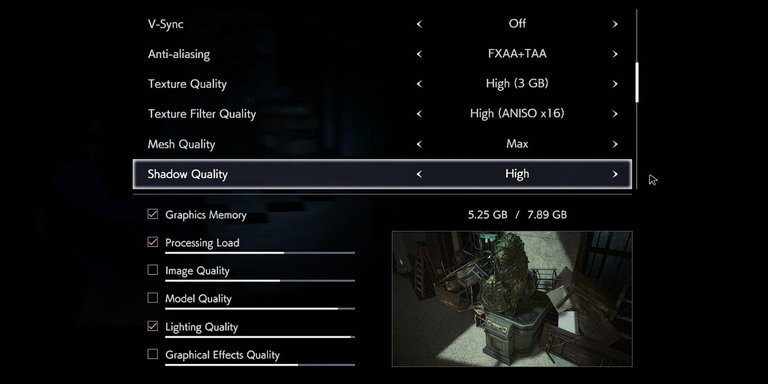

This is something a few people know already, but there are many things to look at before I talk about the neat new things both GPU and CPU manufacturers are putting out. We live in an era of excess, where games push for maximum fidelity whenever they can.

Most games these days will cram in as much detail as possible at the highest preset. The issue is that some of those settings eat up more GPU resources than they're worth. In that case, it's better to just lower them.

A big example is anisotropic filtering, where the max setting usually goes up to 16x. That's where textures look the most refined, but dialing it down to 8x won't change much unless you use a bigger display or have eyesight keen enough to notice. This gives you a small boost, and it's only one step on a list of options you can pick from, such as anti-aliasing, ambient occlusion, view distance, foliage, effects, motion blur, light bloom, etc.

Anti-aliasing is the biggest performance hit. Most games offer MSAA, but the cheaper alternatives are FXAA or TAA. In some cases, it's better to leave it at 2x MSAA or SMAA. Modern games use additional rendering techniques that hide jaggies and stair-step effects anyway. The most common ambient occlusion method is SSAO, which serves its purpose by default; newer games use SSAO+ instead of HBAO, which is very demanding.

I honestly didn't mind toning down the view distance and foliage rendering in front of me in games like GTA V; lowering them just a little saves both precious CPU and GPU resources. Lighting and effects rendering aren't much of a bother unless you're playing a game with those in overabundance. Last but not least, I want to touch on ray tracing.

Ray tracing is the hot new thing because it changes a lot. Almost every component of visual rendering, including shadows, lighting, and most of all reflections, can now mimic reality thanks to far more in-depth and complex math. Unless you have a really powerful card with RT capabilities, you're out of luck in certain games like Cyberpunk 2077, Control, Metro Exodus, etc. Cyberpunk is already a demanding game, and turning RT close to max tanks the performance. My 6700 XT, running the highest preset, stays mostly at 30-35 FPS. Developers are still a long way off not only from optimizing this feature, but also from letting people scale it manually to deal with the performance loss.

This next segment focuses on newer tech that tackles the problem with different rendering methods built around scaling and resolution.

Resolution Scaling Wars

Ah yes, if the above was too difficult to work through, then you should read on about some of the features below. If you own an RTX GPU, you have access to one called DLSS. This exclusive feature uses the card's Tensor cores to run an A.I.-based image scaling algorithm. The game renders at a lower resolution than the one you've selected, and the image is then upscaled with a super sampling technique, with the A.I. determining which details matter.

It's complicated, but the end result with DLSS 2.0 looks phenomenally good while providing a 20-50% performance increase. It has some drawbacks, but for the most part it works as a miracle solution to the performance blow dealt to your PC. Not a lot of games have it implemented, but as time goes on, most games eventually will. There's just one problem though: it is exclusive to RTX GPUs, and not a lot of people own those right now. But a more accessible alternative already exists, and this is where AMD comes in with FSR, and that one's a freebie.
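For a rough sense of what a 20-50% increase means in practice, here is a minimal Python sketch. The function names and the sample base frame rates are made up for illustration; real gains depend on the game, the resolution, and the quality mode.

```python
def fps_with_uplift(base_fps: float, uplift_percent: float) -> float:
    """Return the frame rate after a percentage performance increase."""
    return base_fps * (1 + uplift_percent / 100)


def frame_time_ms(fps: float) -> float:
    """Return how long a single frame takes, in milliseconds."""
    return 1000 / fps


# Sample base frame rates, chosen only for illustration.
for base in (30, 60):
    low = fps_with_uplift(base, 20)   # lower end of the quoted range
    high = fps_with_uplift(base, 50)  # upper end of the quoted range
    print(
        f"{base} FPS -> {low:.0f} to {high:.0f} FPS, "
        f"frame time {frame_time_ms(base):.1f} ms -> "
        f"{frame_time_ms(high):.1f} ms at best"
    )
```

The frame time is worth looking at alongside FPS, since it shows how much rendering budget each frame actually gets back.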

It is open source, and it works on anyone's GPU, including older ones. It's one of the many blessings AMD has given us, though it comes with caveats: it doesn't net the same results as DLSS, and there are significant visual blemishes that are easily spotted. FSR 2.0 is an updated version that was showcased with Deathloop and brings large improvements, including on the visual side. It now goes toe-to-toe with DLSS. Not many games use it yet, though that is slowly changing.

There are also driver-level options like RSR, which works almost the same as FSR but on just about any game you launch. Nvidia and AMD aren't alone in this: most games now have a resolution scale setting, where rendering below native resolution increases FPS at the cost of visual fidelity. Amping up anti-aliasing while doing this kind of helps too. Then there's Unreal Engine's own proprietary TSR, which functions similarly to FSR and is available in games running on UE5.
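To put numbers on that trade-off, here is a small Python sketch of how a resolution scale setting maps to the resolution the GPU actually renders. The function names and the sample scale values are assumptions for illustration, not the presets of DLSS, FSR, or any particular game.

```python
def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Return the internal render size for a per-axis resolution scale.

    `scale` is the fraction of the output resolution on each axis,
    e.g. 0.5 means half the width and half the height.
    """
    return round(out_w * scale), round(out_h * scale)


def pixel_share(scale: float) -> float:
    """Return the fraction of output pixels that are actually rendered."""
    return scale * scale


# Illustrative scale values on a 2560x1440 display.
for scale in (1.0, 0.75, 0.5):
    w, h = render_resolution(2560, 1440, scale)
    print(f"scale {scale:.2f}: renders {w}x{h}, "
          f"{pixel_share(scale):.0%} of the output pixels")
```

Because the scale applies to both axes, the pixel count drops with the square of the scale. The FPS gain is usually smaller than the pixel savings suggest, since not all of a frame's work depends on resolution.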

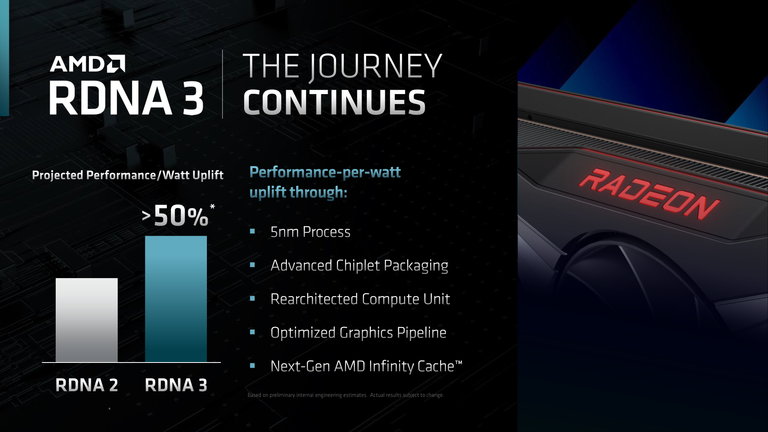

In-game software is great, but what about something beneficial on the CPU hardware side of things? AMD introduced Smart Access Memory, which lets the CPU address the whole of an RX 6000 GPU's video memory at once instead of through a small window, increasing performance by up to 15%. It is AMD's take on Resizable BAR, which Nvidia also uses, but AMD's SAM nets more results somehow (it works as long as you're using both an AMD CPU and GPU).

An Unpredictable Market

New GPU rumors are already floating around. Some say the cards will be really expensive, that they'll consume way more power than before, and, worst of all, that Nvidia has asked TSMC to reduce production of 5nm wafers due to low demand, similar to what AMD is doing. These are all rumors, mostly, though not unwarranted ones, since people are expecting big things from the next generation.

The other problem is that most people have started buying up GPUs now that prices have gone down and are still trending that way. Who knows for certain whether the newer ones will beat the old ones on price/performance, or whether they'll just be expensive and very power hungry.

I think we've reached a point, thankfully, where we don't have to deal with FOMO, thanks to these features being around to put to good use. Sometimes it's OK to tinker with the settings a bit; even if you're in the master race, you can dial things down. If not, maybe wait for modders to bring FSR 2.0 mods to the majority of games, just like they did with Cyberpunk 2077 recently.

The market is settling down. Intel Arc is slowly but surely entering the market, and despite not meeting expectations, things are actually going well for them. If it goes accordingly, team red and team green might have to step up against their new competition. If you've saved up, you can finally buy a GPU at the right price; if you've already bought one, you're still a long way off from needing to replace it, thanks to the reasons above. It's a win-win. At the end of the day, buy what you want, when you can.

I was waiting for the price to go down a bit and now it seems like it's gonna take an eternity.

They are still going down, the crypto mining market is dead. There are a lot of used GPUs out there.

I don't believe in used GPUs, especially ones that were used for mining. And I stopped reading news about prices, so I didn't know they were going down. I need to check again, it seems.

I really didn't bother about GPUs, because after all, if I want to, it is definitely cheaper to just buy NVIDIA's GeForce NOW... it's like renting a GPU in some ways and all you need is good internet. I don't really play a lot of demanding games anyway, so there's also not much motivation to upgrade. Intel integrated graphics all the way xd.

Although that probably means that I need to upgrade my internet... but hey, that does sound more useful than a graphics card...